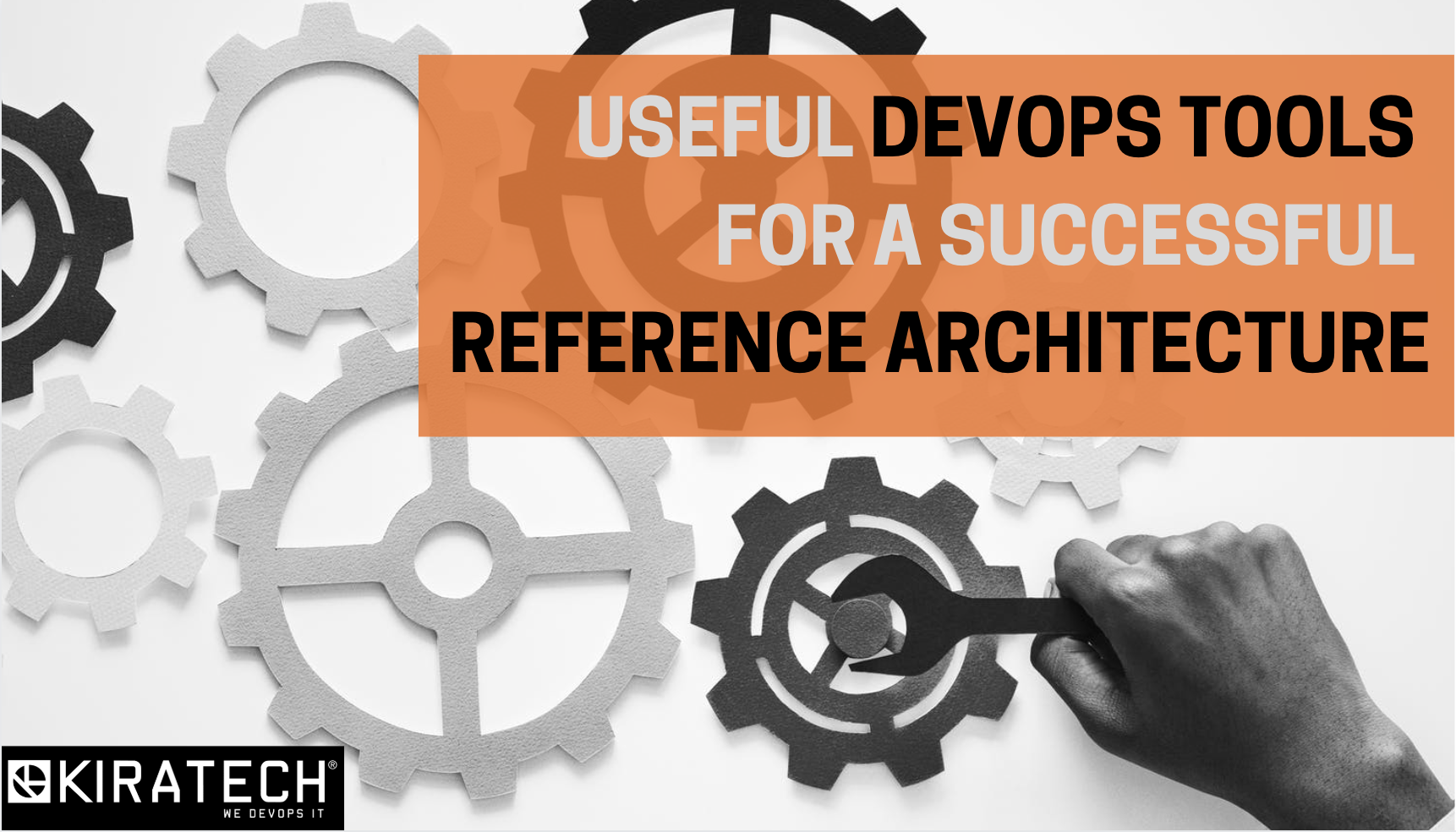

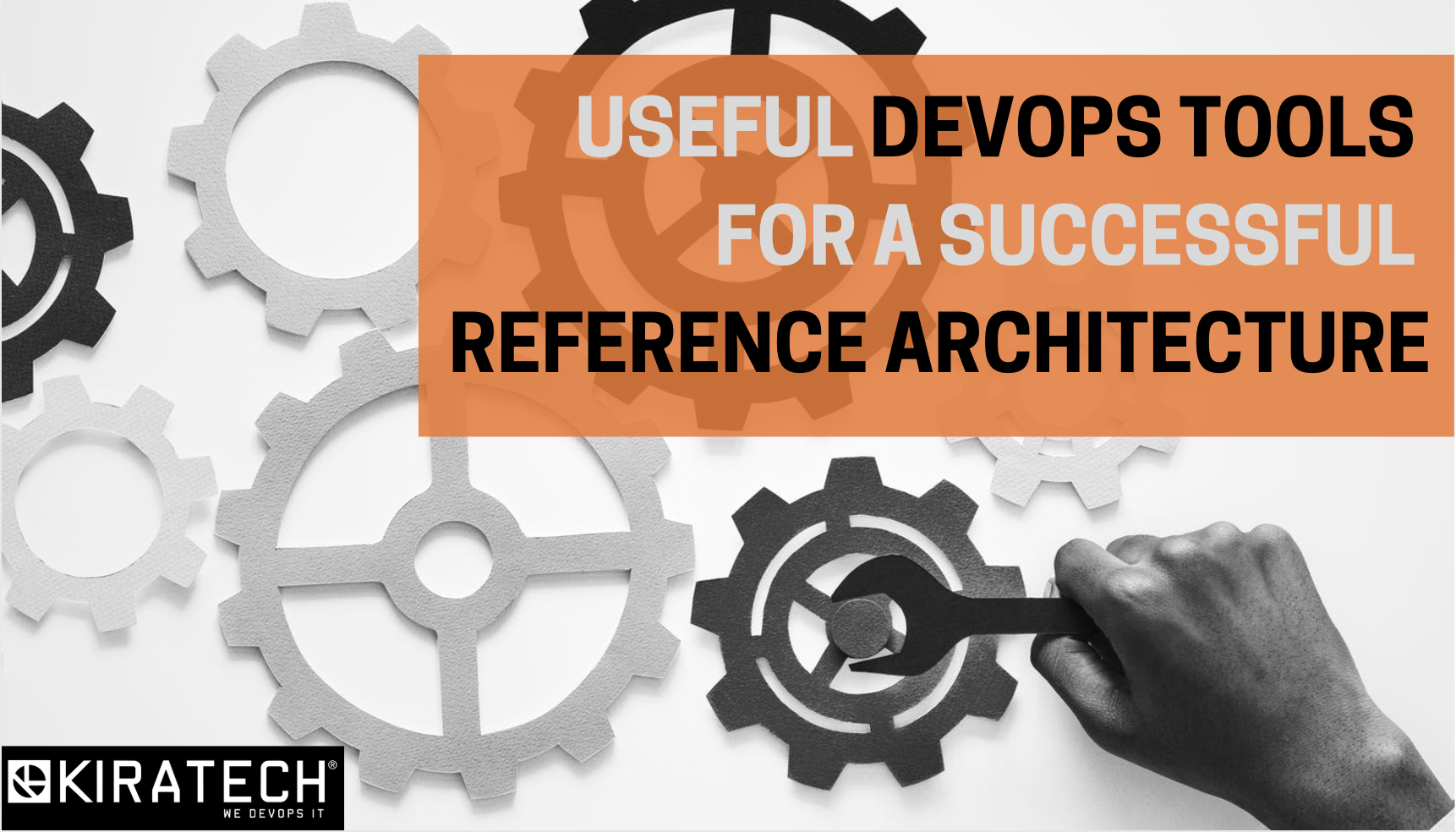

In our previous blog posts, we explored DevOps methodology, Cloud and Security, along with their related tools and practices. We explained how Digital Transformation has radically changed companies’ approach to these three areas, with the aim of making application development more flexible and agile.

But how do DevOps tools interact with each other? And which model should companies aspire to?

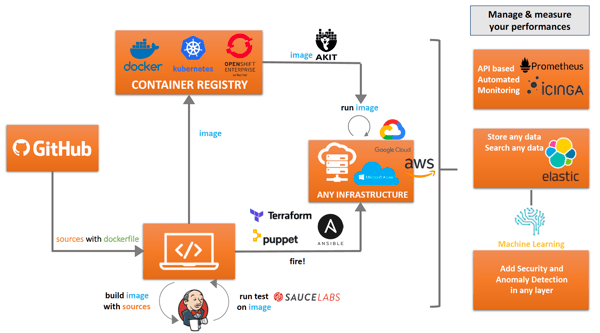

Let’s analyse together what we consider the ideal Reference Architecture, which is the standard that, based on our experience, we believe to be the best to make different DevOps Tools engage in order to implement an automatized, fast, efficient and safe software life cycle.

The architecture we are referring to is the following:

At first glance, it may look like an intricate scheme, but we can guarantee that, once you understand its underlying logic, it may become your reference point as well.

Let’s begin by saying that, before starting any process, the best thing to do is to define standards: the basic rules and procedures that govern how activities are managed.

Suppose, then, that your company or your IT department needs to:

- Create a new server;

- Start new containers running an application;

- Produce and archive documentation.

To optimize and automate these processes and make them efficient, standard and unambiguous actions must be identified and performed every time one of them is started. In this case the action should always be the same: commit the code and push it to GitHub, the best-known and most widely used platform for code hosting and collaborative development based on Git, a DVCS (Distributed Version Control System).

In the same spirit, once the input has been received, the process should be defined and driven by a central tool that describes its logic and tells the system how to proceed. This step should cover all software development phases in DevOps style, from writing code to configuration, and from testing to deployment and release. Jenkins is the tool we chose for this key role.
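To make the idea of a single, standard trigger more concrete, here is a minimal Python sketch of how a central orchestrator might map an incoming commit to one of the three standard processes listed above. The repository names, pipeline names and `CommitEvent` fields are hypothetical; in a real setup GitHub would send a webhook and Jenkins would select the job, which this sketch does not model.

```python
from dataclasses import dataclass

# Hypothetical mapping: each repository area is bound to exactly one standard pipeline,
# so the same action (a commit) always starts the same, predictable process.
PIPELINES = {
    "infrastructure": "create-server",
    "application": "start-containers",
    "docs": "build-and-archive-docs",
}


@dataclass(frozen=True)
class CommitEvent:
    """Minimal stand-in for a push notification (fields are illustrative assumptions)."""
    repository: str
    branch: str
    sha: str


def route(event: CommitEvent) -> str:
    """Return the name of the pipeline the central tool should run for this commit."""
    try:
        return PIPELINES[event.repository]
    except KeyError:
        # No standard defined for this area: refuse instead of improvising a process.
        raise ValueError(f"no standard pipeline for repository {event.repository!r}") from None


if __name__ == "__main__":
    print(route(CommitEvent(repository="application", branch="main", sha="abc123")))
```

Rejecting unknown repositories, rather than falling back to a default, reflects the point above: every process should start from an agreed standard.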

Inside Jenkins we define the so-called pipelines, which describe the process: a chain of connected software components in which the output of each element becomes the input of the one that immediately follows.
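The chaining principle can be sketched in a few lines of Python: each stage is a function that takes the artifact produced by the previous stage and returns a new one, and the pipeline folds the stages over the initial input. The stage names and the dictionary-based artifact are illustrative assumptions, not Jenkins syntax (real Jenkins pipelines are written in a Groovy-based DSL, usually in a `Jenkinsfile`).

```python
from functools import reduce
from typing import Callable, Dict, List

# An "artifact" is whatever one stage hands over to the next (hypothetical structure).
Artifact = Dict[str, object]
Stage = Callable[[Artifact], Artifact]


def checkout(a: Artifact) -> Artifact:
    # Pretend to fetch the committed source identified by its SHA.
    return {**a, "source": f"src@{a['sha']}"}


def build(a: Artifact) -> Artifact:
    # The build consumes the source produced by checkout.
    return {**a, "binary": f"{a['source']}.bin"}


def run_tests(a: Artifact) -> Artifact:
    # A failing check raises, which stops every later stage.
    if not str(a["binary"]).endswith(".bin"):
        raise RuntimeError("tests failed")
    return {**a, "tests_passed": True}


def deploy(a: Artifact) -> Artifact:
    # Deploy only what has passed the tests.
    return {**a, "deployed": bool(a["tests_passed"])}


def run_pipeline(stages: List[Stage], initial: Artifact) -> Artifact:
    """Feed the output of each stage into the next one, in order."""
    return reduce(lambda artifact, stage: stage(artifact), stages, initial)


if __name__ == "__main__":
    print(run_pipeline([checkout, build, run_tests, deploy], {"sha": "abc123"}))
```

If any stage raises an exception, the fold stops at that point, which mirrors how a failing stage halts the rest of a pipeline.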

So far we have mentioned the two main DevOps enablers, which ensure that the process starts correctly and in a standardized way. Each of them, however, deserves a closer look to understand how it works and which technologies underpin it.

GITHUB:

GitHub can be described as something halfway between a social network and a file repository. It is used for both Open Source and private projects.

We can use it to store technical documentation, application code or definition files, infrastructure configuration (hence, not just application software), Ansible playbooks and pipelines, all split into separate repositories according to the areas involved.

As far as development flows are concerned, GitHub is based on a process called GitHub Flow. This workflow keeps track of the software’s history in a clear and readable way, supports its growth and lets teams split their efforts across the implementation, fixing, clean-up and release phases, giving each of them a suitable space in the repository and an appropriate place in the flow. All of this is constantly monitored, so that any critical issue can be addressed quickly at any level of the process.
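As an illustration of the gating idea behind GitHub Flow (work happens on short-lived branches off `main`, and changes reach `main` only through a reviewed pull request), here is a hypothetical merge check in Python. The required approval count and the `checks_passed` flag are assumptions standing in for a team’s own branch-protection rules; GitHub enforces such rules itself, so this only models the policy.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    """Simplified pull request (hypothetical model, not the GitHub API)."""
    head: str                  # short-lived feature or fix branch
    base: str = "main"         # in GitHub Flow, branches merge back into main
    approvals: int = 0         # number of approving reviews
    checks_passed: bool = False  # e.g. the CI pipeline finished successfully


def can_merge(pr: PullRequest, required_approvals: int = 1) -> bool:
    """Allow a merge only for reviewed, green branches, so main stays deployable."""
    return (
        pr.base == "main"
        and pr.head != "main"
        and pr.approvals >= required_approvals
        and pr.checks_passed
    )


if __name__ == "__main__":
    pr = PullRequest(head="fix/login-timeout", approvals=1, checks_passed=True)
    print(can_merge(pr))
```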

At the heart of this tool is the concept of TEAM: it is extremely collaborative during code review and offers several native integrations with other tools. GitHub allows the entire process to be kept on a single platform while letting developers work with the tools they prefer, including continuous integration tools and hundreds of third-party apps and services.

Another fundamental feature we use, and one that is often not fully exploited, is Project Management. It provides a Kanban board linked to open issues or pull requests, which can span several projects and can each be assigned to one or more team members. Along the way it is possible to comment and attach files or images, building a complete and organized history in a wiki-like fashion that enables close collaboration between the different actors. This last point is crucial for us, since we embrace both the DevOps and the Open Source visions, and it is one of the main reasons we recommend GitHub to our customers. For larger organizations in particular, we propose GitHub Enterprise, the on-premises version of GitHub, delivered as a virtual appliance that can run in the cloud or on all major hypervisors.

Besides the Microsoft acquisition, one of the most recent and important pieces of GitHub news is Actions. Up to 100 GitHub Actions can be combined to build sophisticated workflows triggered by specific events in the repository, and each of them is highly customizable. At the time of writing, this functionality is available only as a Limited Public Beta.

JENKINS:

It is the best-known system for Continuous Integration and Continuous Delivery: it automates the application delivery life cycle, increasing both productivity and application quality. It relies on a master-slave architecture and is very simple to install and configure.

Here too there is a corresponding Enterprise version, CloudBees Core, which:

- Thanks to its on-premises and cloud Continuous Delivery capabilities, meets specific Enterprise security, scalability and manageability requirements;

- Can be integrated with OpenShift and Kubernetes;

- Includes “Team” features, like GitHub;

- Provides advanced analytics, monitoring and alerting capabilities;

- Is very flexible;

- Also works with non-containerized applications;

- Has a very user-friendly web interface.

As explained above, Jenkins, with CloudBees Core as its Enterprise version, is the central tool that covers all software development phases from a DevOps perspective. One of the reasons it is the most widely used solution is the sheer number of plugins available for interacting with other tools (more than 1,400 today).

At the DevOps World | Jenkins World conference in September 2018, CloudBees also announced its current portfolio of technology solutions, which includes:

- CloudBees DevOptics, a tool for monitoring and analysing DevOps performance;

- CloudBees CodeShip, the as-a-Service counterpart of CloudBees Core;

- CloudBees Starter Kit, a bundle for teams that want to start using CloudBees: it gives access to CloudBees Core and CloudBees DevOptics, providing business features such as pipeline automation, DevOps performance monitoring and real-time value stream mapping to visualize the different phases of the software delivery process. The kit also includes virtual training and a remote quickstart, in which new teams are helped to install and configure CloudBees Core and CloudBees DevOptics, with an overall management view.

- CloudBees Apps, a sort of “app store” in which the community constantly releases “prefabricated” integrations that behave exactly like Jenkins plugins. For instance, you can download a plugin to connect to Git, or an application that provides Continuous Integration on OpenShift using code pulled from GitHub, and the whole setup comes with a ready-made integration.

What we have discussed is just the foundation of the reference architecture, our “ideal” DevOps architecture, which involves additional steps and technologies such as:

- Containers, if the application is based on this technology or if the build and runtime environments should be fully reproducible and defined as code;

- Configuration management solutions, whenever the classic virtual infrastructure needs its management processes to be abstracted and automated;

- Big data management and analytics, to analyse the data generated by tools and processes and use it to prevent errors and anomalies;

- Machine learning, which makes it possible to identify and correlate relevant information within huge amounts of data, so that it can be used to simplify and automate tasks.

Follow our next blog posts, in which we will explore these technologies in detail, linking them to the development flow and to the overall architecture, and showing how, by following this scheme, the process becomes so precise and controlled that the chance of errors is reduced to a minimum.
